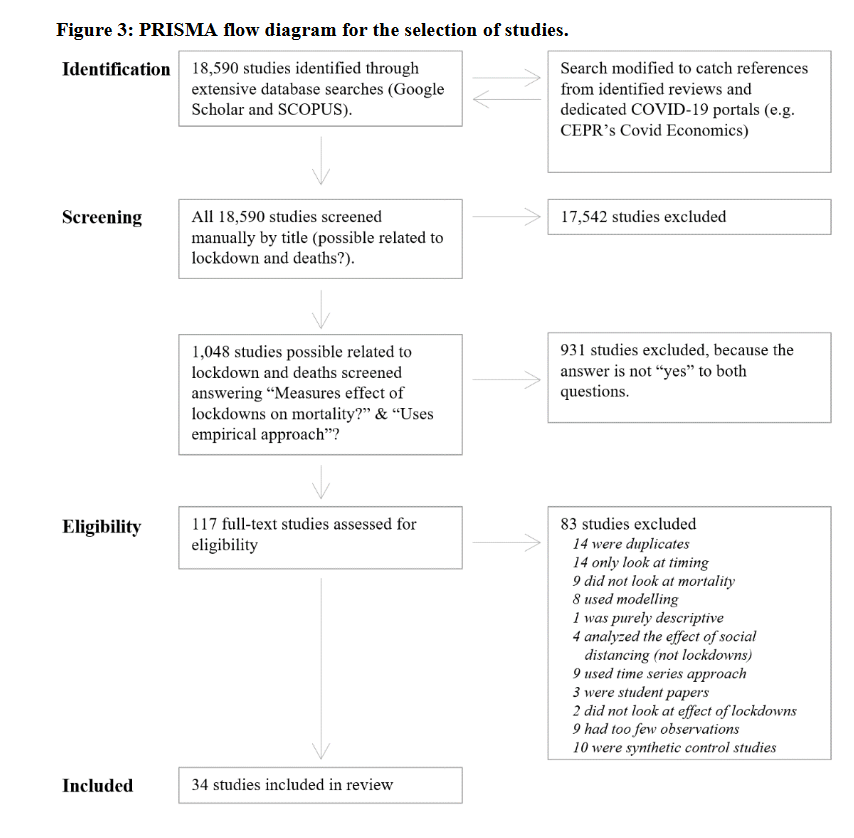

My partner sent me this article last night. "Please critically appraise it!" he said. "I'm not great at critical appraisal, I just like to look at the search...." I'll start with the search, anyway. A thread 🧵🧵🧵

sites.krieger.jhu.edu/iae/files/2022…

There is so much to say about this article. This preprint. Scanned. They SCANNED Google Scholar and Scopus. We all know about the kinds of stuff one can find in Google Scholar. What method did they use to scan it? We'll never know.

Three economists wrote a paper about COVID-19 mortality and didn't think to look in PubMed. Or consult an information specialist. 1,360 search terms? Where are they? They're not anywhere in this paper. They also limited the search to English for a global pandemic -- doesn't make sense.

Doesn't sound like they searched for "COVID19" or "fatality/ies". And did "lockdown" also cover quarantine? Shutdown? They were "comfortable" that Google Scholar and Scopus found everything.

Here are their footnotes (all text in ALT)

They included everything from a publication called "COVID Economics," just because. "The papers are vetted by Editors for quality and relevance. Vetting is different from refereeing in the sense that the decision is up or down, with no possibility of revising and resubmitting."

Note that that does not = peer review. Who are these editors?

cepr.org/content/covid-…

At what point did they de-duplicate? I think they didn't, because they only "scanned."

I don't see ANY of their included articles coming from public health, health, or medical journals. They're from economics, management, and psychiatry journals.

I also don't see much quality appraisal/risk of bias happening. They kinda gloss over it. Seems like there's a fair amount of selection bias, if I had to guess.

I'll stay away from critically appraising the analyses, because that's not my expertise, but nothing -- NOTHING -- about the search is replicable or transparent.

There are also glaring conflicts of interest. And the author has splashed the logo of his prestigious institution all over the front of the preprint. Gives it credibility with the media, you know? Because they're going wild about it.

If we are to take away something from this, it's the same-old-same-old that I always say:

✅ work with an info specialist

✅ understand what you're getting into & how to do it

✅ follow reporting and conducting guidelines for the chosen methodology -- they exist

What else?

Just mentioning "following PRISMA" or whatever isn't a sign of quality. Although it seems like a lot of authors think it is. My friends, I've got things to do.//